Questmist.com: An Online Dream Journal and Community - Share Your Dreams, Get Paid

Introduction

In today's digital age, dreams are no longer confined to the subconscious; they can be recorded, shared, and even monetized. Questmist.com is a platform where users can maintain an online dream journal, share their dreams with a community, and get paid for doing so. This article covers the platform's features, its benefits, and what sets it apart from other online journals.

What is Questmist.com?

Questmist.com is an innovative online platform designed for dream enthusiasts. It allows users to document their dreams in a personal journal, share them with a vibrant community, and earn money through various engagement methods. Whether you're a casual dreamer or someone who deeply analyzes their dreams, Questmist.com offers a space to explore and monetize your nocturnal narratives.

The Importance of Dream Journaling

Dream journaling is not just a pastime; it’s a powerful tool for self-discovery and mental health. Keeping a dream journal can help individuals:

- Gain insights into their subconscious mind

- Identify recurring themes or patterns

- Improve memory and recall skills

- Foster creativity and problem-solving abilities

Questmist.com enhances these benefits by providing a structured and interactive platform for dream journaling.

Key Features of Questmist.com

User-Friendly Interface

Questmist.com boasts a user-friendly interface that makes it easy to document and navigate your dreams. The intuitive design ensures that even beginners can start their dream journal without any hassle.

Privacy and Sharing Options

Users can choose to keep their dreams private or share them with the community. This flexibility ensures that you can control your dream journal's privacy settings according to your comfort level.

Community Engagement

The platform fosters a strong sense of community by allowing users to comment on and discuss shared dreams. This interaction can lead to deeper interpretations and a richer understanding of one's dreams.

Earning Opportunities

One of the standout features of Questmist.com is the ability to earn money by sharing your dreams. Through a variety of engagement options, such as likes, comments, and shares, users can monetize their dream content.

Advanced Dream Analysis Tools

Questmist.com provides tools to help users analyze their dreams more effectively. These tools can include keyword tagging, thematic categorization, and even AI-generated insights based on dream content.
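To make keyword tagging and thematic categorization concrete, here is a minimal Python sketch. It is not Questmist.com's implementation: the `THEMES` lexicon and the `tag_dream` function are hypothetical names chosen for illustration, and a real tool would likely use a far larger vocabulary or a language model.

```python
import re
from collections import Counter

# Hypothetical theme lexicon for illustration only;
# these are not Questmist.com's actual categories.
THEMES = {
    "flying": {"fly", "flying", "soar", "soaring", "float", "floating"},
    "falling": {"fall", "falling", "drop", "plunge"},
    "water": {"ocean", "sea", "river", "swim", "swimming", "flood"},
    "pursuit": {"chase", "chased", "chasing", "run", "running", "escape"},
}


def tag_dream(text: str) -> list[str]:
    """Return the themes whose keywords appear in the text, most frequent first."""
    # Lowercase the text and split it into simple word tokens.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for theme, keywords in THEMES.items():
        hits = sum(1 for word in words if word in keywords)
        if hits:
            counts[theme] = hits
    return [theme for theme, _ in counts.most_common()]


if __name__ == "__main__":
    print(tag_dream("I was flying over the ocean, then falling into the sea."))
```

Tagging entries this way is what makes recurring themes searchable: once each dream carries a list of tags, counting how often a tag appears across a journal is a one-line aggregation.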

How to Get Started on Questmist.com

Creating an Account

Getting started on Questmist.com is straightforward. Users can sign up using their email or social media accounts. The registration process is quick, ensuring that you can start journaling your dreams without delay.

Setting Up Your Dream Journal

Once registered, users can set up their dream journal by customizing their profile, choosing privacy settings, and starting their first dream entry. The platform provides prompts and tips to help you accurately record your dreams.

Sharing and Earning

Users can share their dreams with the community by selecting the public option when making an entry. The more engagement your dreams receive, the higher the earning potential. Questmist.com offers various payout methods, ensuring that you can easily access your earnings.
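The relationship between the public option and engagement can be sketched as a small data model. Everything here is an assumption made for illustration: the `DreamEntry` fields, the private-by-default setting, and the unweighted engagement total are hypothetical, and the article does not say how Questmist.com actually counts engagement or calculates payouts.

```python
from dataclasses import dataclass


@dataclass
class DreamEntry:
    """A hypothetical journal entry; the field names are illustrative."""
    title: str
    text: str
    is_public: bool = False  # assumed default: entries stay private
    likes: int = 0
    comments: int = 0
    shares: int = 0

    def engagement(self) -> int:
        # Unweighted total of interactions; the real weighting is unknown.
        return self.likes + self.comments + self.shares


def community_feed(entries: list[DreamEntry]) -> list[DreamEntry]:
    """Return only public entries, ordered from most to least engagement."""
    public = [entry for entry in entries if entry.is_public]
    return sorted(public, key=lambda entry: entry.engagement(), reverse=True)


if __name__ == "__main__":
    journal = [
        DreamEntry("Night flight", "I flew over a city.", is_public=True, likes=4, comments=1),
        DreamEntry("Private one", "Kept to myself.", likes=50),
        DreamEntry("The long hallway", "Doors everywhere.", is_public=True, likes=9, shares=2),
    ]
    for entry in community_feed(journal):
        print(entry.title, entry.engagement())
```

Private entries never reach the feed regardless of their counts, which mirrors the idea that only dreams shared with the public option can attract engagement.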

The Science Behind Dream Journaling

Dreams have fascinated humans for centuries, and modern science continues to explore their significance. Dream journaling, supported by platforms like Questmist.com, may:

- Enhance cognitive functions such as memory and creativity

- Provide psychological insights that can aid in mental health treatments

- Serve as a therapeutic tool for processing emotions and experiences

Benefits of Using Questmist.com

For Individuals

- **Self-Discovery**: Gain deeper insights into your subconscious mind.

- **Community Support**: Engage with a community that shares your interest in dreams.

- **Monetary Gain**: Earn money for sharing your dream experiences.

For Researchers

- **Data Collection**: Access a vast database of dream journals for research purposes.

- **Pattern Analysis**: Utilize advanced tools to analyze common themes and patterns in dreams.

For Creatives

- **Inspiration**: Use dreams as a source of inspiration for artistic and creative projects.

- **Collaboration**: Connect with other creatives who draw inspiration from their dreams.

Community and User Stories

Real-Life Impact

Numerous users have shared how Questmist.com has impacted their lives. For some, it’s a therapeutic outlet, while for others, it’s a source of inspiration for their creative projects.

Case Studies

Case studies of users who have successfully monetized their dream journals can provide motivation and practical insights for new users.

Expert Insights

Psychological Perspectives

Psychologists and dream analysts provide insights into the importance of dream journaling and how platforms like Questmist.com can aid in self-discovery and mental health.

Technology and Dream Analysis

Experts discuss how modern technology, integrated into platforms like Questmist.com, enhances the traditional practice of dream journaling with advanced analysis tools.

Conclusion

Questmist.com is revolutionizing the way we perceive and interact with our dreams. By offering a platform that combines dream journaling, community engagement, and monetization, it caters to a wide range of users, from casual dreamers to serious researchers. Whether you're looking to explore your subconscious or earn money by sharing your dreams, Questmist.com provides the tools and community support you need.

![A user video conferences via tablet. [teleconference / online meeting / digital event]](https://images.idgesg.net/images/article/2020/09/user_video_conferencing_via_tablet_teleconference_online_meeting_digital_event_participant_by_alvarez_gettyimages-1218764252_2400x1600-100858484-large.jpg)

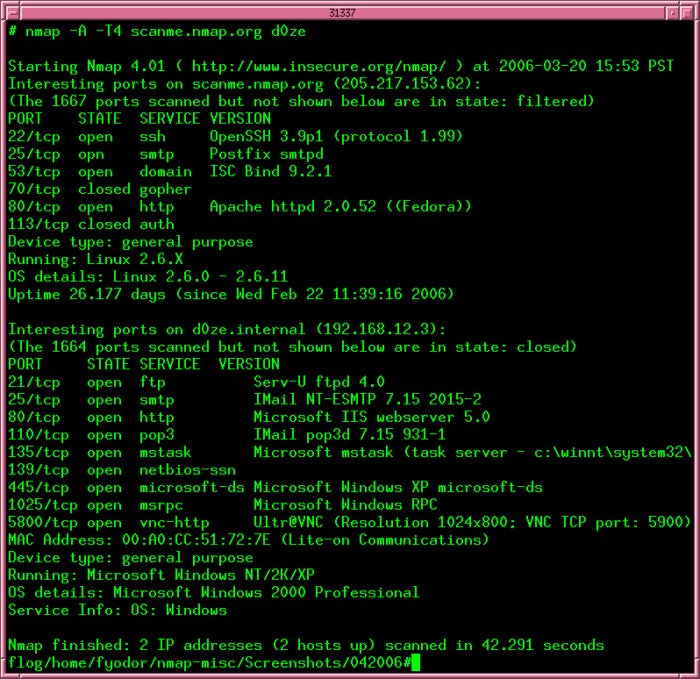

Nmap.org

Nmap.org